What Happened? Gaia today launched the Gaia AI Phone—an on-device, decentralized AI smartphone that processes language models locally and doubles as a network node. The 7,000-unit limited run debuts in Korea and Hong Kong at $1,399, with owners able to contribute compute to the Gaia Network and earn $GAIA.

Gaia’s AI phone bets on a fully local model—and turns every buyer into a network participant

Gaia Labs is taking a maximalist swing at the “AI phone.” The startup opened preorders today for the Gaia AI Phone, a limited run of 7,000 devices that run language models fully on-device and operate as nodes in a decentralized compute network. The pitch is unambiguous: users should own their models, their data, and a slice of the network their phones help power. Pricing starts at $1,399, with an initial rollout in Korea and Hong Kong and a public presale window beginning September 2. Gaia plans a live demo and broader reveal at Korea Blockchain Week later this month.

Gaia’s framing taps a rising anxiety in crypto and open-source circles: as Silicon Valley moves AI assistants into every pocket, control and economics are gravitating to cloud platforms. The company says its handset compresses models that once required data-center infrastructure into a package efficient enough for offline inference and secure enough to act as a full network participant—shifting both processing and value accrual to the edge. That positioning echoes a wider industry pivot toward “AI phones,” but Gaia pushes the logic to its endpoint by removing cloud dependence altogether.

The announcement: a phone that is also a node

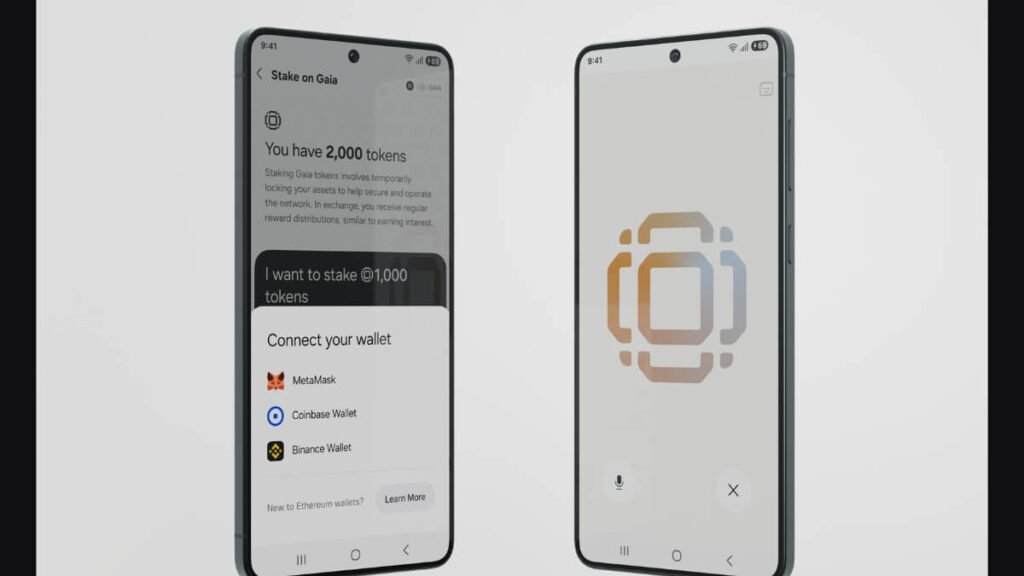

The device runs an “open AI operating layer” on Galaxy S25 Edge-class hardware and ships with Gaia’s stack: a local node runtime for LLMs, a voice-to-agent interface, on-chain identity, an agent launcher, MCP compatibility for tools, and a pre-loaded $199 “Gaia Domain.” Early adopters gain partner perks and can earn $GAIA tokens by contributing compute to the network. Deliveries are slated to begin later this year, with a live inference demo planned for Seoul.

Gaia says the phone performs both local and network-assisted inference, allowing owners to keep sensitive interactions offline while opting into network workloads for rewards. The company also emphasizes optionality: the phone runs standard Android apps from Google Play, with Web3 features disabled by default for users who just want a privacy-first AI phone.

Why it matters: AI is moving on-device—fast

Independent forecasts suggest Gaia’s timing is shrewd. Counterpoint Research projects that more than 400 million generative-AI-capable phones will ship in 2025, roughly a third of the market, with momentum accelerating through the decade. IDC also projects a rapid ramp in “next-gen AI” devices, arguing that AI capability will become pervasive across personal computing by 2027. These estimates imply a massive installed base ready to exploit on-device inference, provided the user experience meets expectations.

Mainstream platforms are already normalizing local AI. Google’s Pixel line runs Gemini Nano for on-device tasks, adding multimodal features and offline utility in Android. Apple’s Private Cloud Compute tries a hybrid tack—doing what it can on iPhone, then securely offloading heavier tasks to a privacy-hardened Apple cloud. Samsung, meanwhile, positions “Galaxy AI” as the anchor of an “AI phone era,” promising wider device coverage. Gaia’s claim to “complete AI sovereignty” is thus less about first principles than degree: how much can you keep local, and when can you avoid the cloud entirely.

The hardware backdrop: phone silicon finally has the headroom

The leap to on-device LLMs has been enabled by model compression techniques and surging NPU performance in flagship chipsets. Samsung’s Exynos 2500, which powers the Galaxy Z Flip7, boasts up to 59 TOPS of AI inferencing, a sizable jump over the prior generation and useful headroom for quantized 7B–8B models. Qualcomm has showcased on-device Stable Diffusion and is optimizing Meta’s Llama 3 for Snapdragon platforms. Taken together, the industry’s silicon stack is now credible enough to host assistants, summarizers, and multimodal perception locally, with trade-offs relative to cloud-scale models.
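To make the “quantized 7B–8B” headroom concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone occupy at different precisions. The bit widths are illustrative assumptions, not Gaia’s published configuration, and the estimate ignores the KV cache, activations, and runtime overhead, so real usage would be higher.

```python
def weight_footprint_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Compare full 16-bit weights with 8-bit and 4-bit quantization.
    for params in (7, 8):
        for bits in (16, 8, 4):
            size = weight_footprint_gb(params, bits)
            print(f"{params}B parameters @ {bits}-bit: ~{size:.1f} GB")
```

At 4 bits per weight, a 7B model needs roughly 3.5 GB for weights, against about 14 GB at 16 bits, which is why quantization is the step that brings models of this size within reach of a flagship phone’s memory.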

Gaia’s approach tries to ride this curve while retaining a sovereign default: even when network compute is available, the system prioritizes local inference for privacy and latency, pushing to the network only when the user explicitly opts in for rewards or needs additional horsepower.
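A local-first policy of this kind can be sketched in a few lines. This is a hypothetical illustration, not Gaia’s actual runtime: the `Request`, `Settings`, and `choose_target` names, the `sensitive` flag, and the token budget used as a stand-in for “needs additional horsepower” are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    LOCAL = "local"
    NETWORK = "network"


@dataclass
class Request:
    est_tokens: int          # hypothetical proxy for how heavy the task is
    sensitive: bool = False  # hypothetical flag for private interactions


@dataclass
class Settings:
    network_opt_in: bool = False    # off by default: nothing leaves the device
    local_token_budget: int = 2048  # assumed limit of what runs well locally


def choose_target(req: Request, settings: Settings) -> Target:
    """Prefer local inference; offload only with opt-in and real need."""
    # Sensitive requests, or any request without explicit opt-in, stay local.
    if req.sensitive or not settings.network_opt_in:
        return Target.LOCAL
    # With opt-in, offload only when the task exceeds the local budget.
    if req.est_tokens > settings.local_token_budget:
        return Target.NETWORK
    return Target.LOCAL


if __name__ == "__main__":
    opted_in = Settings(network_opt_in=True)
    print(choose_target(Request(est_tokens=8000), Settings()))                # stays local
    print(choose_target(Request(est_tokens=8000), opted_in))                  # offloaded
    print(choose_target(Request(est_tokens=8000, sensitive=True), opted_in))  # stays local
```

The point of the sketch is the ordering of the checks: the privacy conditions are evaluated before any capacity check, so opting in can widen what goes to the network but never overrides a sensitive request.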

Tokens, incentives, and the DePIN playbook

Under the hood is an economic thesis familiar to decentralized physical infrastructure networks (DePIN): distribute workloads and incentives to the edge, and user-owned devices will bootstrap useful coverage and compute. Helium’s people-powered wireless network, for example, rewarded hotspot operators and subscribers for expanding coverage and sharing anonymized mapping data; its subscriber base crossed 250,000 this year as the network experimented with tokenized incentives and “Cloud Points.” Gaia adapts that logic to AI workloads, encouraging owners to contribute inference cycles and earn $GAIA while preserving control of local interactions.

Analysts see room for this model to grow. Messari pegs DePIN’s total addressable market in the trillions across networking, compute, and sensor networks, even if current capitalization remains a fraction of those end markets. If Gaia’s phone can reliably serve as a network node without draining batteries or compromising UX, it could mesh neatly with the broader DePIN arc.

Standards and agents: MCP in your pocket

A notable detail is MCP compatibility—the open Model Context Protocol that standardizes how models connect to tools and data. MCP is quickly becoming a lingua franca for AI agents across vendors, with Anthropic, AWS, and others promoting cross-tool interoperability. Shipping MCP on a phone is a developer bridge: build once for agents, and those capabilities follow the user between local and network contexts. For a handset that wants to be both private and extensible, that matters.
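For readers unfamiliar with the protocol, MCP messages are JSON-RPC 2.0, and a client invokes a tool with the `tools/call` method. The sketch below only builds such a request; the tool name `summarize_note` and its arguments are hypothetical, and nothing here reflects how Gaia’s phone wires MCP internally.

```python
import json
from itertools import count

# Simple incrementing request IDs, as JSON-RPC requires a unique id per call.
_request_ids = count(1)


def make_tool_call(name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(request)


if __name__ == "__main__":
    # "summarize_note" is a made-up tool used purely for illustration.
    print(make_tool_call("summarize_note", {"note_id": "demo-123"}))
```

Because the message shape is the same whichever server exposes the tool, an agent written against it does not need to know whether the tool runs on the handset or elsewhere, which is the portability the article describes.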

Lessons from recent “AI hardware” cycles

Gaia is not the first to rethink the phone. Crypto-native Solana Mobile proved demand exists when its Chapter 2 racked up tens of thousands of preorders in days. But AI-native gadgets have struggled: Humane recalled the AI Pin’s charging case over a battery hazard and ultimately discontinued the device after HP acquired its assets, while Rabbit’s R1 earned bruising reviews for underdelivering versus a smartphone baseline. Gaia’s advantage is pragmatic: it embraces a premium Android base and augments it with a deep AI/decentralization stack, rather than trying to replace the phone with a niche device.

Privacy as a product feature, not a paragraph

User sentiment aligns with Gaia’s bet on data sovereignty. Cisco’s 2024 privacy research found 62% of consumers worry about how organizations use AI, and a majority say privacy laws affect their trust. The 2025 benchmark survey of security leaders similarly finds that privacy investments drive tangible business outcomes. If AI assistants are to handle messages, locations, photos, and payments, more consumers may prefer assistants whose data never leaves the device, at least for default tasks.

Apple’s push with Private Cloud Compute shows even incumbents must explain when and how data leaves the handset. Gaia’s stricter default—local first, then opt-in to a user-owned network—is a clean narrative that could resonate in Web3-heavy markets like Korea and Hong Kong.

Regulatory overhang: the EU AI Act and the smartphone edge

Even if Gaia’s models run locally, regulations will shape design choices. The EU AI Act imposes obligations on high-risk systems and transparency requirements for many consumer-facing AI features. Edge execution does not exempt providers from documenting risks or complying with disclosure rules, especially for generative content and biometric capabilities. Phones that blend on-device models with network inference will need governance that spans both contexts.

What to watch at Korea Blockchain Week

Korea Blockchain Week runs September 22–28 in Seoul, with main-stage programming around September 23–24. Gaia plans live demos of on-device inference and node participation—likely the best test of whether the handset’s dual mandate holds up under pressure. If performance is close to cloud-based assistants for common tasks, the case for a fully local default strengthens. If workloads frequently overflow to the network, Gaia will need to prove the economics are compelling and the power draw manageable.

The competitive frame: incumbents vs. sovereignty maximalists

Tech giants now ship AI-first phones with hybrid inference, tight OS integration, and vast app ecosystems. Google’s Pixel line, Samsung’s Galaxy portfolio, and forthcoming Snapdragon 8-series flagships all court developers with NPUs and SDKs. Startups like Gaia must therefore differentiate on trust, ownership, and economics: who decides where your data lives, which models run by default, and who gets paid when your device contributes to AI workloads. In that frame, “AI sovereignty” is not rhetoric but product posture.

Risks and realities

Decentralized AI networks are not immune to operational risks. Bittensor’s rise shows appetite for distributed model markets, but the ecosystem has also weathered security incidents and turbulent market cycles. For handset-native nodes, attack surfaces expand to include consumer devices, firmware, and wallets. If Gaia can harden its runtime, sandbox agents effectively, and keep user keys safe, the phone-as-node idea becomes far more defensible.

Battery life and thermals remain practical constraints for on-device LLMs, even with aggressive quantization. Qualcomm, MediaTek, and Samsung have shown credible demos and toolchains, but developers still choose their spots—summarization, transcription, multimodal perception—while leaving open-ended chat and heavy reasoning to larger models. Expect Gaia to steer users toward tasks that shine locally and to lean on the network for spikier workloads.

Bottom line: a sovereignty stress test for the AI phone era

Gaia is surfacing a question incumbents avoid: if phones can run good models locally, why export anything to the cloud by default? The company’s answer is a synthesis—keep personal intelligence on the device, then let users choose when to rent out compute to a peer network that pays them back. If the demos at KBW match the rhetoric, and if early buyers report that the phone behaves like a flagship first and a node second, Gaia might have found a durable wedge into a market that will ship hundreds of millions of AI-capable handsets this year. If not, it risks joining a crowded shelf of ambitious experiments that underestimated the smartphone baseline.

Either way, the direction of travel is clear. AI is coming to the phone, not just through it. The firms that win the next cycle will prove not only that their models are smart, but that their defaults respect sovereignty, hold up under real-world constraints, and share the economic upside with the people doing the inference.


Disclaimer: This article is for informational purposes only and does not constitute investment advice.